Road-testing the latest AI prototyping tools

In this article, we’ll explore the fast-evolving world of AI prototyping tools that are rapidly reshaping the industry. We’ll compare some of the leading options to help non-technical product people identify the best fit, and discuss how these tools can be integrated into existing workflows.

Background of prototyping tools

Prototyping is a core part of a designer’s toolkit, essential for validating educated assumptions made during earlier discovery phases. It can be achieved through various methods, some more involved than others, ranging from linking simple static pages together to complex simulations that require real-time facilitator interaction behind the scenes, known as the Wizard of Oz method.

A product team collaboratively decides on the best approach by weighing what they need to, or could, learn from the prototype against the effort required to create it. In essence, they are weighing the ROI.

More recently, design tooling has evolved to a point where its functionality strikes a sweet spot between effort and reward. Designers can now link static screens and elements with relatively advanced animations and interactions, with Figma currently being the most notable example. Figma has more recently introduced complex logic-based prototyping features such as direct interactions with forms, data manipulation, and logic-based routing, but these advancements come with a steep technical learning curve that deters many designers. As a result, this functionality can fall short of expectations.

Figma’s expansion into logic-based prototyping appears to be an attempt to bridge the gap with long-standing tools like Axure RP, which has been around since 2002. Although Axure RP doesn’t require coding to create logic-based prototypes, users benefit significantly from an understanding of basic web technologies like HTML, CSS, and JavaScript. More recently tools like Framer and Proto.io have also tried to find the right balance. In my experience, technical barriers have led to resistance from both senior leadership who hold the purse strings, and from designers who are less inclined to engage with the underlying mechanics.

But there’s an entirely new workflow emerging that could remove these barriers: AI prototyping. It opens up prototyping to the wider product team, and promises a compelling balance of speed, ease of use, and required technical capability. In this article, I’ll take a deep dive into this rapidly evolving space at a pivotal moment when adoption appears to be shifting from ‘innovators’ to ‘early adopters.’ Is this the beginning of a new standard in design workflows, or just a passing trend? Let’s find out.

Classifications of current AI tooling for prototyping

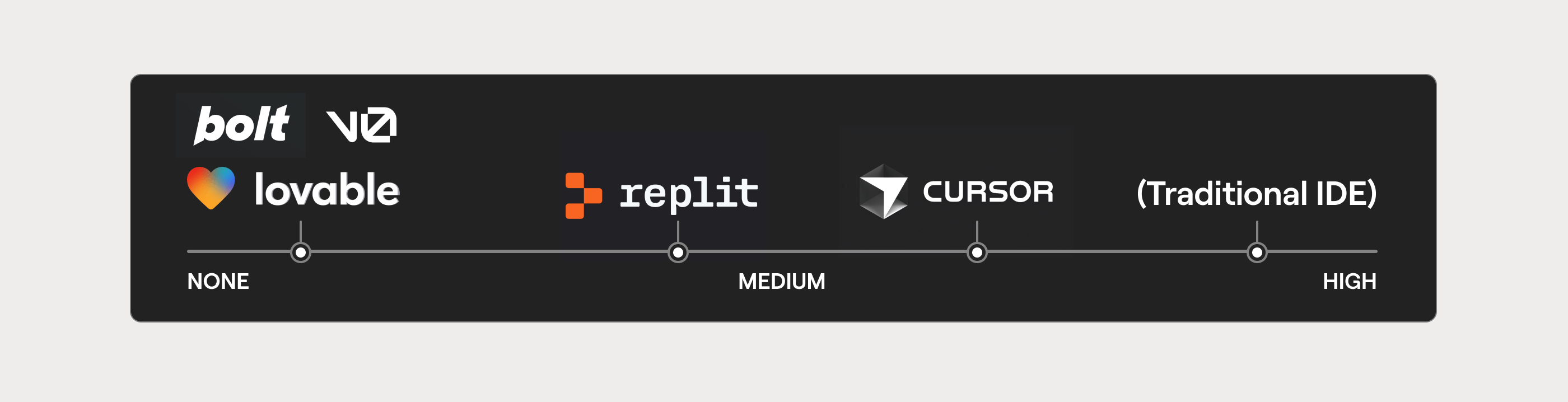

With frenzied horizontal and vertical innovation in this space I’ll do us all a favour and break up the different options into some high level buckets to help guide our exploration.

1. Chatbots

Chatbots like ChatGPT provide a user-friendly interface for a large language model (LLM), allowing users to explore topics in depth, refine content, and generate new ideas, making complex information more accessible and interactive. Because this wrapper is designed to do such a variety of tasks, it isn’t tailored and targeted to provide an optimised experience when it comes to creating mockups and prototypes for digital services based on prompting.

However, for non-technical folk there is still value to be found here if it is your only option. It will accept prototype prompts, generate some code and show you an interactive preview in a second pane. You can then prompt it to make amends, but it seems to fall over easily, and someone non-technical soon gets tied in knots. If you can’t get the budget for a more tailored tool, don’t discount it though: you can easily share a prototype by generating a public URL, which could be used for feedback in a third-party setting. What you cannot do is run the more complex processes which we’ll discuss shortly.

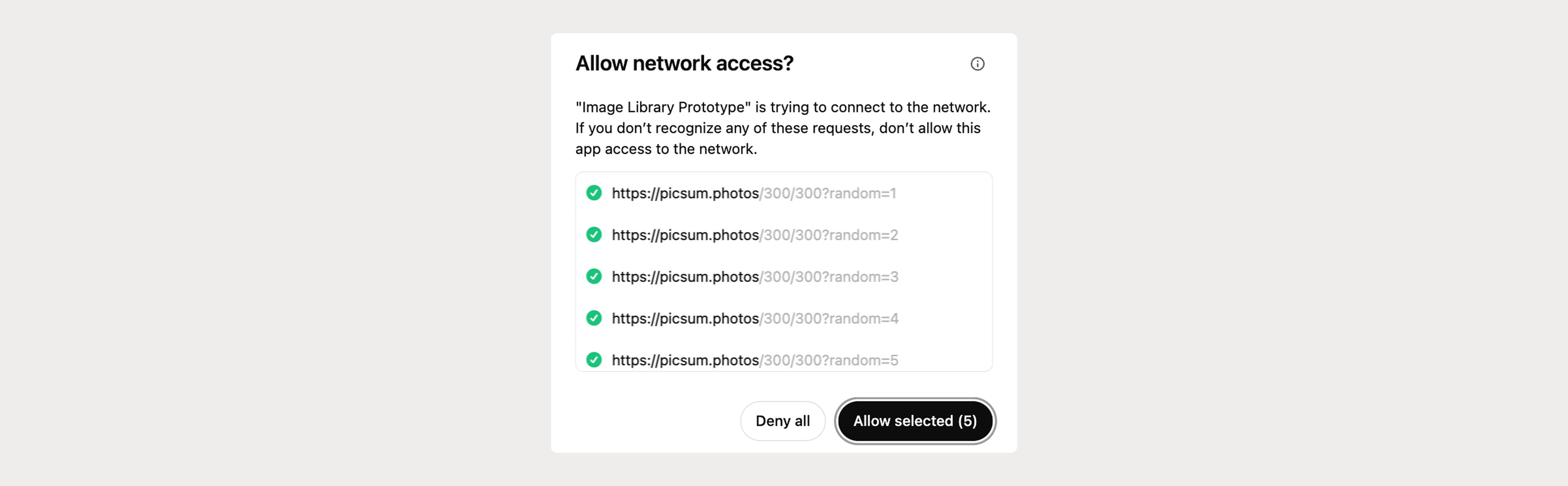

I created a quick test of a small image gallery with a delete function. Feel free to play around with the code or make edits with new prompts. (When you first open it, you’ll need to select ‘Allow selected’.)

For those of you who are more technically minded, ChatGPT can now interact directly with IDEs in its native app. For example, it can operate on and edit files directly in VS Code. So in theory you can ask ChatGPT to generate React components and throw them into an editor. However, in this research we’ll be focussing on user types who wouldn’t be inclined to work like this (including myself).

2. Prompt based cloud development environments

This is the classification we’ll focus on in more detail throughout the article, as its richer features and usability make it particularly well-suited for non-technical roles. To be clear, these tools aren’t just for creating throwaway prototypes, you can take them all the way, building and deploying full-stack web and mobile apps entirely within the tooling.

With mission statements like “Idea to app in seconds” and bold prompts such as “What can I help you ship?” and “What do you want to build?”, these tools make ambitious promises. But do they deliver?

From a usability perspective, the different tools in this category seem to follow a similar approach to one another, placing a prompt box front and centre to draw users in and encourage them to generate their first iteration as quickly as possible. They also provide pre-defined prompts and templates, offering guidance on direction, technology choices, and UI design for those who need a starting point.

3. Local development assistants (IDEs)

These are a more complex classification, where text prompting plays a secondary role to the traditional coding interface. You begin with a templated file structure, including an index file and its supporting packages, ready to go.

You can call up a question box, select code snippets, and integrate using natural language as you work. If needed, you can provide full prompts to generate more comprehensive code.

Additionally, you can reference other files in the project from within the chat without manually switching between them. And, as you might expect, you must run the code directly within the IDE, viewing your outputs in a web browser just as you would with traditional development tools.

In February 2025, the term ‘vibe coding’ was coined to describe the process of an engineer using conversational AI to build and interact with code. It will be interesting to see how this concept evolves as these new approaches continue to take shape.

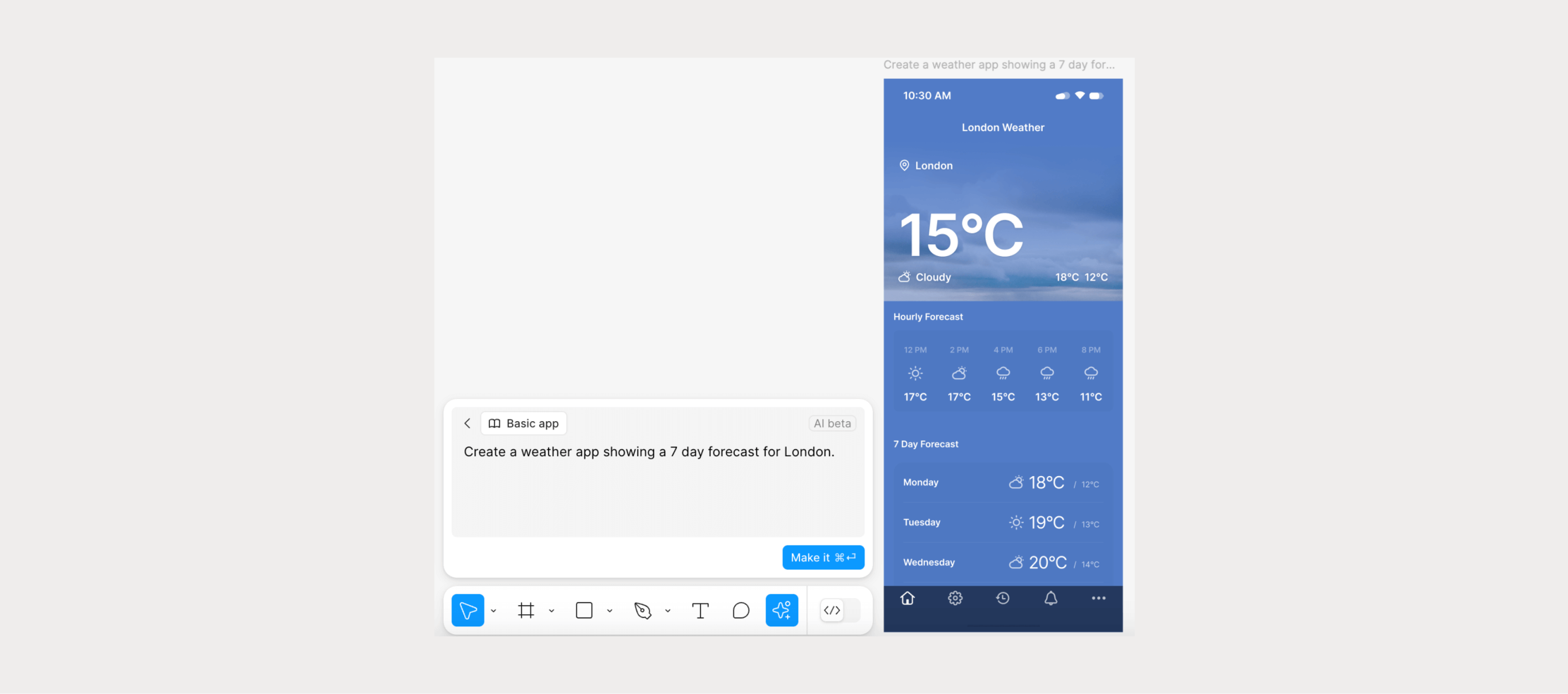

4. In-tool AI supported prototyping (Figma)

The native AI capabilities within our product design tools are also racing to provide users with seamless functionality, improving both efficiency and creativity. In this example, I’ll focus on Figma’s implementations.

Figma AI Beta was announced in June 2024 and rolled out to most users by the end of that year. It offers core features such as First Draft, which builds pages from its component library using text-based prompting. You can then refine these pages further with additional prompts, combined with traditional hands-on editing.

Once you’ve created a set of screens, whether manually or with AI assistance, Figma AI can intuitively establish connections between them, predicting likely user flows. In addition to these two core features, it also includes supporting tools that achieve outcomes such as content rewriting, dummy content injection, content searching, language translation and image refinement.

For now, it serves as a solid option for UI inspiration, page-linking assistance, and content control. However, it still thinks in terms of individual pages rather than broader user flows, meaning it falls short in the UX space. That said, with the rapid pace of AI development, I’d recommend revisiting your tools every three months to see what’s new!

Different users and their ‘Jobs To Be Done’ (JTBD)

We’ve covered the landscape from a really high level and you should have a rough idea of the types of options available to generate an AI prototype. But before we dive into one area in more detail, let’s explore the different types of users of these tools and what their favoured options might be.

A. Product designer

A product designer is likely to be seasoned in their design tool of choice (e.g. Figma). But as we’ve seen above, there is definitely value in exploring a cross-tooling workflow between their core design tool (#4) and a prompt based cloud development environment (#2). We’ll explore possible workflow options later on to optimise the way work is created, tested and versioned.

B. Wider product people

Some product owners and managers do know how to use some design tools based on their backgrounds but from my experience it isn’t the norm. On top of that, the level of expertise tends to be fairly light, which is expected given they’re not actively keeping up with how the tooling evolves. This lack of in-tool prototyping knowledge could be filled by the prompt based cloud development environment options (#2).

We should also consider roles such as a user researcher wanting to spin up a quick test without being blocked by a designer's time, or a data analyst looking to add qualitative data to their existing quantitative insights, again independently of a designer. There’s a risk it could lead someone to skip steps in a process the whole team agreed on.

C. Early career engineer

I can see a place for both prompt based cloud development environments (#2) and local development assistants (#3) for this type of user. The former could be used for reverse engineering the solutions in a really digestible way. All you need to do is imagine what you’d like to see, then pick it apart in the code viewer. The latter would be great to port learnings over into once comfortable.

D. Seasoned engineer

Local development assistants (#3) look like they’re particularly valuable for seasoned engineers working on isolated or solo projects where speed and efficiency are critical. These tools provide familiar development environments enhanced with AI assistants that streamline coding, automate repetitive tasks, and in theory can help tie together complex components with minimal friction. This likely means faster iteration cycles and the ability to explore multiple approaches without getting bogged down in setup, helping engineers move from concept to execution with greater speed. A word of warning, however: the level of precision in this space is still debatable, and these tools sometimes produce sub-par code, so engineers must remain eagle-eyed if using them to generate production-ready products. For example, they can still hallucinate APIs that don’t exist, particularly when working with private code that is stored in separate external repos.

A concluding point on user types: some of these tools allow sideways exporting and uploading, so you could establish a workflow where an engineer passes some code from an IDE to a product manager to pick up in a prompt based solution, and vice versa.

Deep research into AI prototyping SaaS options

Now we’ve had an overview, it’s time to deep dive into some of the market leading prompt based cloud development environment tools (category #2 above) to compare aspects such as usability, functionality and output quality.

Each of these tools currently costs circa $20 a month for a decent chunk of data processing, and all are easily scalable if required.

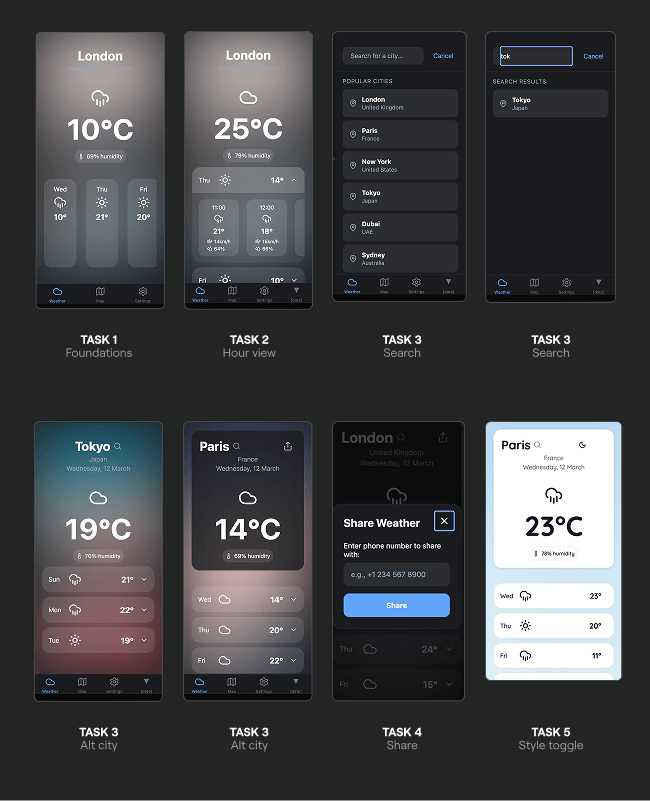

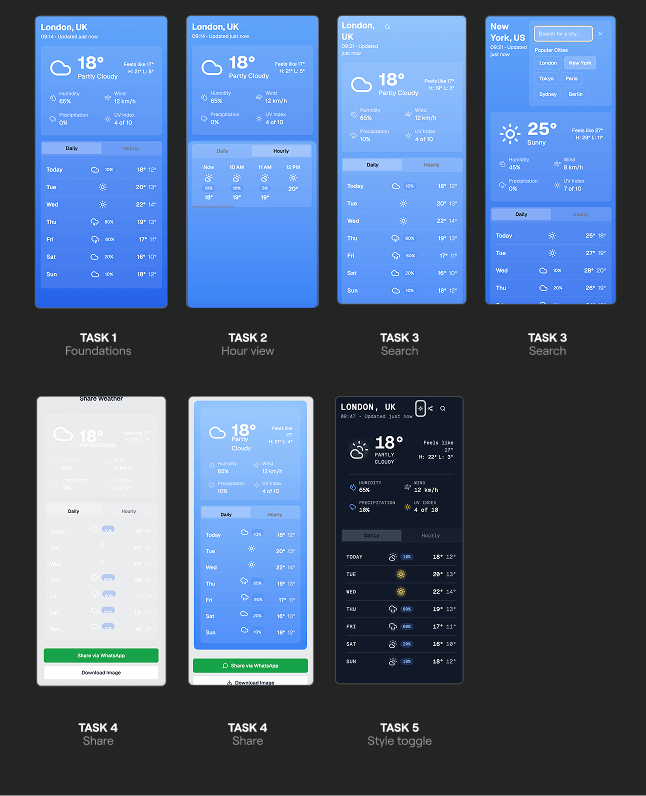

For each prototyping tool I'm going to run the same two scenarios to establish and compare their competency.

Scenario #A - Only text prompting

I will start each test with the same prompt: “Create a portrait oriented weather app prototype that shows the day by day weather overview for a week in London”

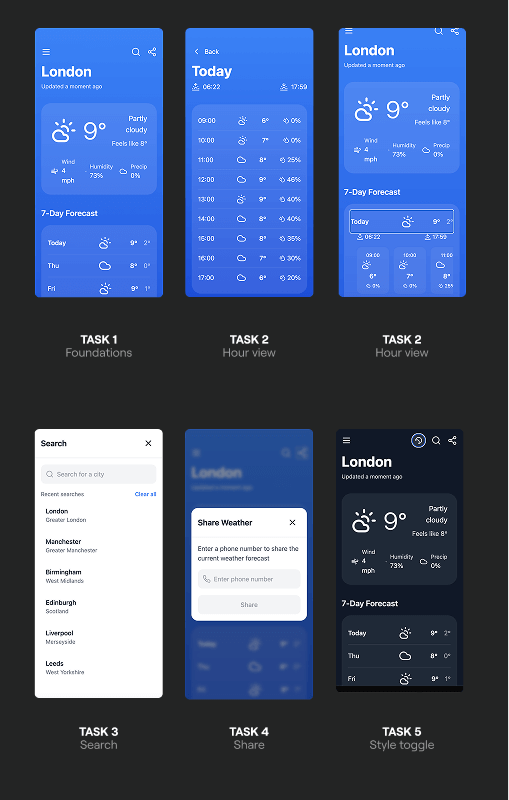

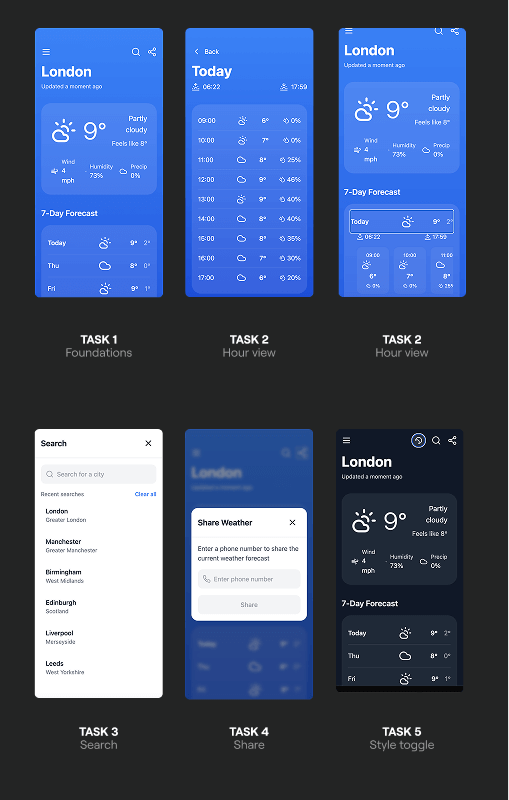

I will then complete the following tasks:

- TASK 1 - Scrollable day by day overview for the next 7 days

- TASK 2 - Detailed view of hour by hour weather fluctuations

- TASK 3 - A search to change to another city

- TASK 4 - A share function to send the report to someone else

- TASK 5 - Add a toggle to switch between styles

Scenario #B - Image reference

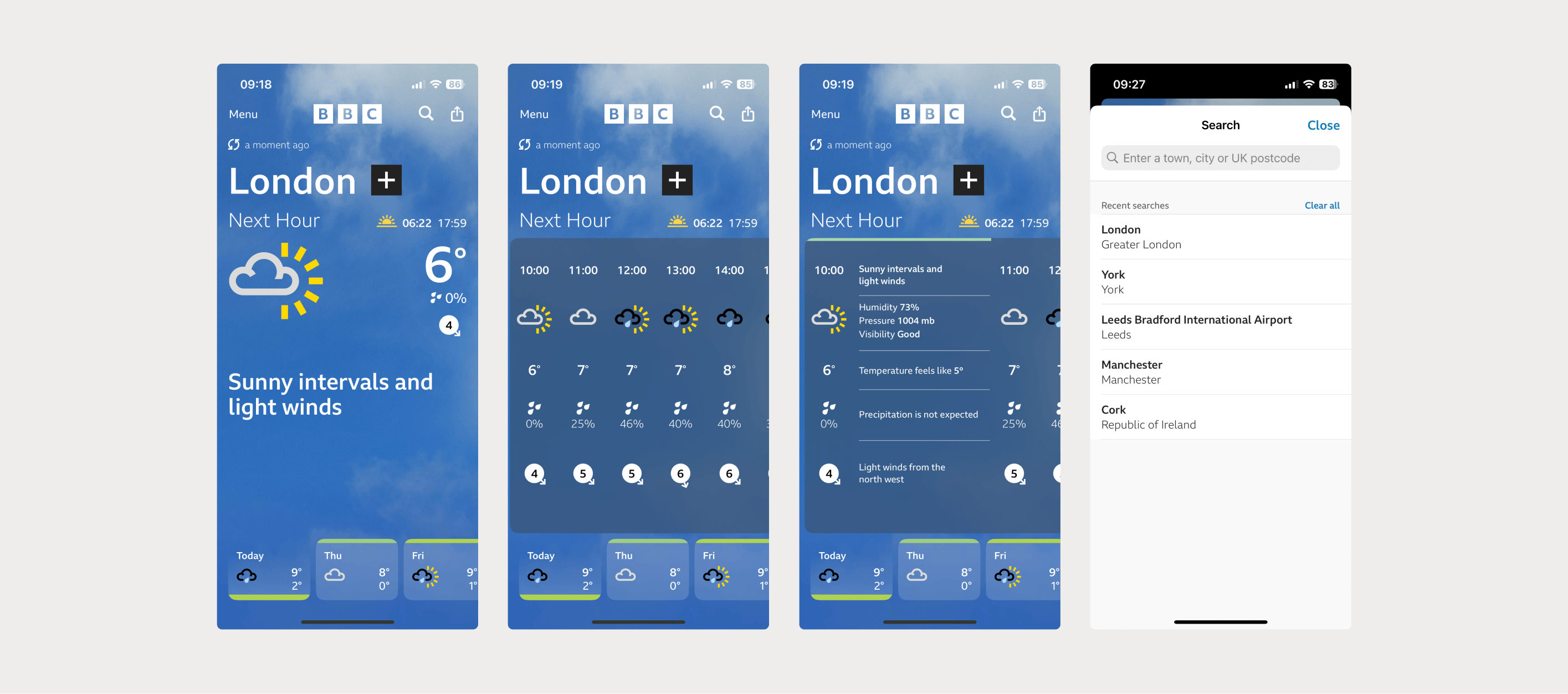

I will feed the tools four reference images in a fresh instance and give the same initial prompt, to see if they use the style, content and UX flows in the images to deliver a more comprehensive first pass of the solution.

Review 1 - Bolt

ChatGPT’s summary of the tool

‘Bolt is a lightweight, prompt-based prototyping tool that lets you create functional UI layouts in seconds. Its strength lies in speed and simplicity—just type what you want, and it instantly generates a web-based interface with working components. It’s ideal for quick experiments, validating ideas, or getting a visual starting point without diving into code or design tools.’

Let’s see if I agree

Task summary

| # | Task | Prompts and debugs required |
|---|---|---|
| 1 | Scrollable day by day overview for the next 7 days | Prompts x 1 / Debugs x 2 |
| 2 | Detailed view of hour by hour weather fluctuations | Prompts x 2 / Debugs x 2 |
| 3 | A search to change to another city | Prompts x 1 |
| 4 | A share function to send the report to someone else | Prompts x 2 / Debugs x 2 |
| 5 | Add a toggle to switch between styles | Prompts x 3 |
| | TOTAL | 9 prompts and 6 debugs |

Results

| Scenario #A - Only text prompting | Scenario #B - Image reference |
|---|---|
| Project link / Prototype link | Project link / Prototype link |

Observations

- The initial build threw up some errors but the debugging always managed to self-assess and fix issues.

- The tool is super user friendly for someone who isn’t technical. I knew it was doing lots of clever things, but I didn’t need to understand how or why unless I wanted to dig more into it.

- The accuracy of the outputs from the prompts was fantastic. When I hadn’t specified in enough detail how a feature should be interacted with, I could easily amend this with a follow-up prompt.

- The inspector means you can point prompts at specific points of the prototype to give the AI more context which is super useful.

- The AI adds extra content from within the reference images which can add a bit of noise to the prototype.

- Even when the AI adds some of the icons from the reference images it doesn't add the functionality to them until you add further prompts to do so.

- But using a reference image seems to be a solid way of bringing across styling baselines.

- It is very easy to generate a link to share for user testing. It’s just as easy to share a link to the working file so any external user can check out the code.

Review 2 - Lovable

ChatGPT’s summary of the tool

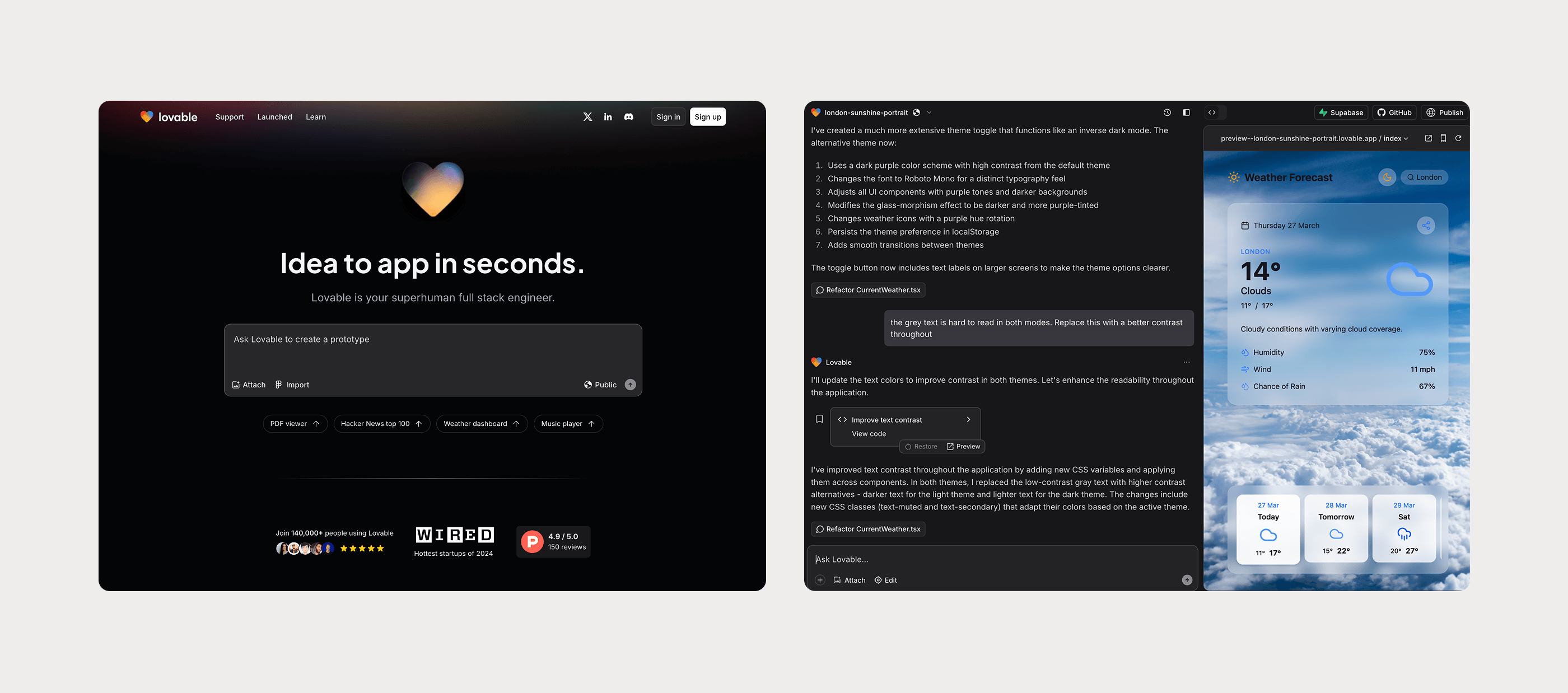

‘Lovable goes beyond interface generation by helping you explore product ideas holistically. It uses AI to generate user flows, wireframes, personas, and even value propositions based on your input. It’s less about pixel-perfect UI and more about early-stage product thinking—great for product teams who want to prototype experiences, not just screens.’

Let’s see if I agree

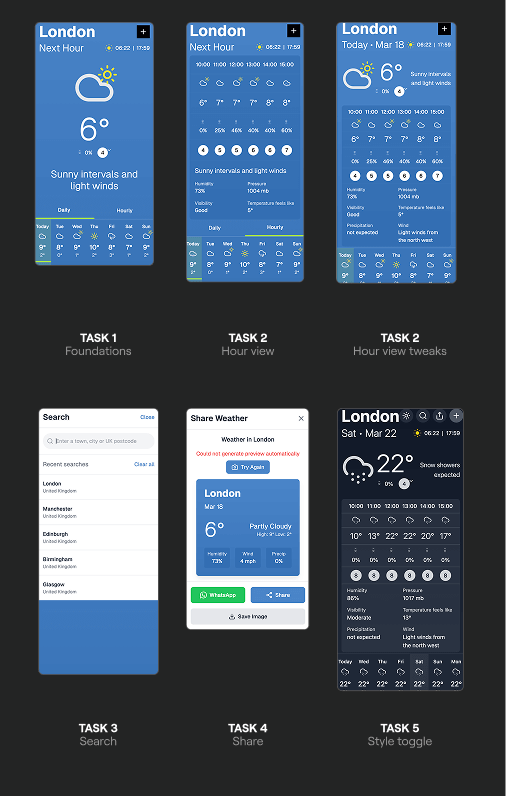

Task summary

| # | Task | Prompts and debugs required |
|---|---|---|
| 1 | Scrollable day by day overview for the next 7 days | Prompts x 2 |
| 2 | Detailed view of hour by hour weather fluctuations | Prompts x 3 / Debugs x 1 |
| 3 | A search to change to another city | Prompts x 1 |
| 4 | A share function to send the report to someone else | Prompts x 2 |
| 5 | Add a toggle to switch between styles | Prompts x 3 |
| | TOTAL | 11 prompts and 1 debug |

Results

| Scenario #A - Only text prompting | Scenario #B - Image reference |
|---|---|
| Project link / Prototype link | Project link / Prototype link |

Observations

- The usability is almost identical to Bolt, although the code is kept more in the background in Lovable and is accessible if the user would like to see it.

- It was less buggy than Bolt when building the prototype. Only 1 debug was required, whereas there were 6 during the Bolt build.

- The creativity of the app was better in Lovable even with no reference. It implemented a relevant background image whereas Bolt only used gradients.

- However because it had been more creative in the first instance it struggled more with the dark mode reskin requests and got itself in a bit of a mess. This would have taken a lot of conversational tweaks to fix.

- When using photo reference it got a lot closer to the original than Bolt, but this added extra content into the page that was a bit noisy.

- Lovable didn’t use space within the prototype as efficiently as Bolt at top level. It started stacking content instead of trying to be intuitive with nesting. However within the content blocks it was very efficient with how it displayed complex information.

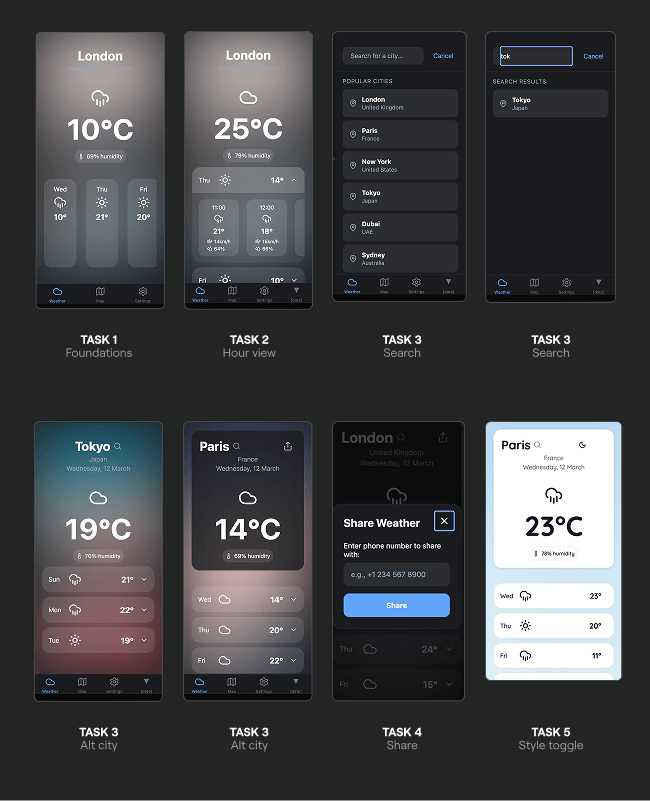

Review 3 - V0

ChatGPT’s summary of the tool

‘V0 by Vercel turns plain-text prompts into clean, editable React components styled with ShadCN UI. It’s a developer-focused tool that excels when you want to quickly scaffold real frontend code from a rough idea. With built-in export options and clean output, v0 is perfect for bridging the gap between concept and shipping code.’

Let’s see if I agree

Task summary

| # | Task | Prompts and debugs required |
|---|---|---|
| 1 | Scrollable day by day overview for the next 7 days | Prompts x 1 |
| 2 | Detailed view of hour by hour weather fluctuations | Prompts x 0 |
| 3 | A search to change to another city | Prompts x 1 |
| 4 | A share function to send the report to someone else | Prompts x 2 / Debugs x 3 |
| 5 | Add a toggle to switch between styles | Prompts x 1 |
| | TOTAL | 5 prompts and 3 debugs |

Results

| Scenario #A - Only text prompting | Scenario #B - Image reference |
|---|---|
| Project link / Prototype link | Project link / Prototype link |

Observations

- V0 doesn’t give me the option to display the prototype at a mobile width, so I had to open it in a new window and use the inspector to reduce its width appropriately.

- This was the first tool to add hourly weather without a separate prompt (nice!).

- Although there were fewer flourishes, it delivered the most cohesive and usable result from the first prompt with regard to layout and content.

- V0 seems to take a bit longer than the first two tools, but the quality it delivers was absolutely worth it.

- V0 is the first tool to absolutely nail the dark mode without returning random odd colour palettes.

- V0 offers an inspector like Bolt to target areas for prompts. Very useful especially for styling tweaks.

- Similarly to the others, when given reference content, V0 adds as many of the elements as possible to match. This will lead to a period of clutter cleanup if prototyping this way.

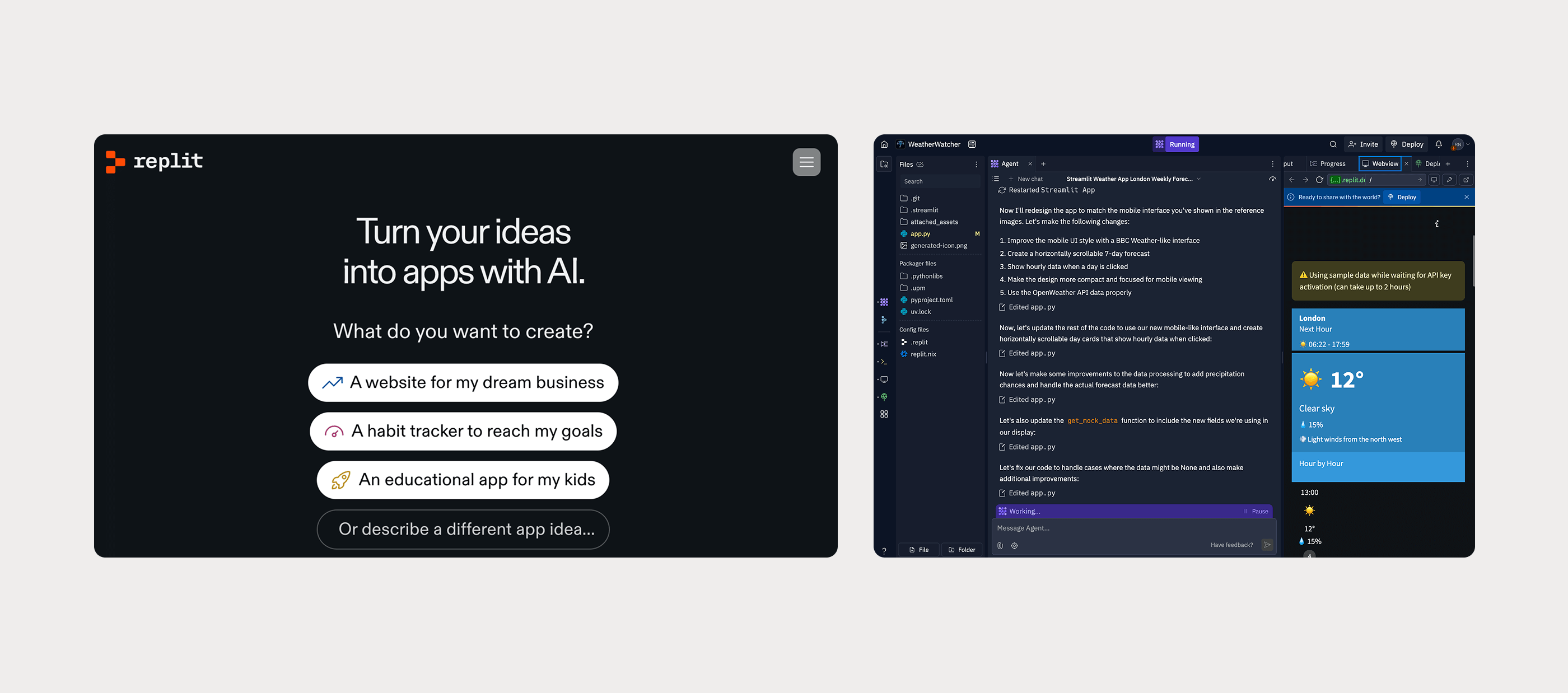

Review 4 - Replit

ChatGPT’s summary of the tool

‘Replit is an online IDE that supports rapid prototyping across dozens of languages. Its AI assistant, Ghostwriter, helps you generate, edit, and debug code in real time. It’s especially useful when prototyping backend logic, small apps, or full-stack experiments—all in the browser, without any setup. Great for technical users who want to move fast and iterate with AI support.’

Let’s see if I agree

I started to repeat the process we’ve seen for the last 3 tools above but it soon became clear Replit is approaching things differently from the others.

- Replit offered a follow up to the initial prompt asking if I wanted some additional features that I hadn't even mentioned. This was a cool and intuitive touch.

- It was also the first tool to ask if I want to connect to a real world API (for the weather forecast). It gave me clear instructions on how to sign up for and generate an API key from the 3rd party weather service. When I did so it then told me the API key might take up to 2 hours to work and told me it was going to temporarily replace this with dummy data (the default approach of the other 3 tools).

- Without prompting it also asked me directly if I needed to attach a database, something none of the others had done.

- It has a far more involved chat experience, splitting out an ‘Agent’ for the bigger jobs and an ‘Assistant’ for the smaller tweaks, which felt like overkill for my specific design-focussed JTBD.
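The dummy-data fallback described in the bullets above can be sketched roughly as follows. This is a minimal illustration, not Replit’s actual generated code: the `DailyForecast` shape, the `loadForecast` and `dummyForecast` names and the placeholder values are all assumptions made for the example, and the live fetcher is passed in as a parameter so that no particular weather service is implied.

```typescript
// Shape of one day in the forecast; field names are illustrative assumptions.
interface DailyForecast {
  date: string; // ISO date, e.g. "2025-01-01"
  highC: number;
  lowC: number;
  summary: string;
}

// A fetcher is any function that returns a week's forecast for a city.
type ForecastFetcher = (city: string) => Promise<DailyForecast[]>;

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Placeholder data shown until the real API responds successfully.
function dummyForecast(startDate: Date, days: number = 7): DailyForecast[] {
  return Array.from({ length: days }, (_, i) => {
    const day = new Date(startDate.getTime() + i * MS_PER_DAY);
    return {
      date: day.toISOString().slice(0, 10),
      highC: 12 + (i % 3), // made-up values, not real weather
      lowC: 5 + (i % 2),
      summary: "Placeholder",
    };
  });
}

// Try the live fetcher first; fall back to dummy data if it throws,
// for example while a newly issued API key is not yet active.
async function loadForecast(
  city: string,
  fetchLive: ForecastFetcher,
  today: Date = new Date(),
): Promise<{ source: "live" | "dummy"; days: DailyForecast[] }> {
  try {
    const days = await fetchLive(city);
    return { source: "live", days };
  } catch {
    return { source: "dummy", days: dummyForecast(today) };
  }
}
```

Keeping the fetcher as a parameter means the prototype can be tested with realistic-looking content from day one and switched over to live data later without touching the UI code.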

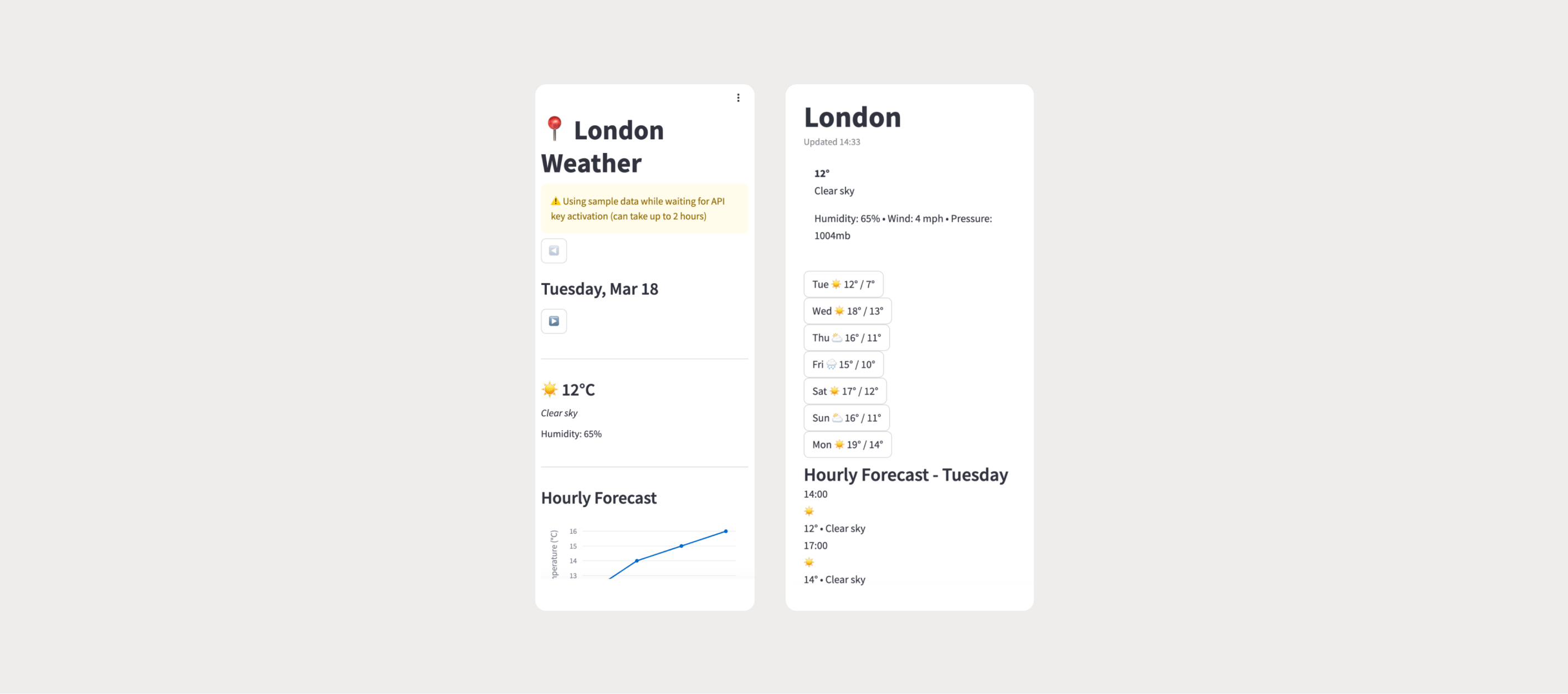

Then it generated the first preview. A completely different stylistic approach to the other 3 and very much styled like a dashboard.

Even when given the reference images as a prompt, it made no attempt to style the content in a similar way as the other 3 had. I’ve seen enough from the other 3 tools to conclude that Replit is on the more technically focussed side of the scale and probably suits more hardcore engineering focussed projects, rather than quick design prototyping for user feedback. I can see how it would be exciting to pair with an engineer to build something more involved here such as an aforementioned Wizard of Oz prototype, but for now I’m going to put it in the ‘kinda an IDE’ bucket.

That completes the review of the text prompting tools (category 2) and as we’ve discovered, Replit sits to the right of Bolt, Lovable and V0 on a sliding scale of ‘technical skill requirement’ for a user whose work isn't anchored in code on a day to day basis.
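To make the three task summaries easier to compare side by side, the per-task counts can be tallied with a few lines of code. This is only a sketch: the numbers are copied from the Bolt, Lovable and V0 tables above, while the `TaskCount` type, the `total` helper and the combined figure are my own illustrative additions rather than a metric used in the tests.

```typescript
// Prompts and debugs per task (tasks 1 to 5), copied from the task summary tables.
interface TaskCount {
  prompts: number;
  debugs: number;
}

const results: Record<string, TaskCount[]> = {
  Bolt: [
    { prompts: 1, debugs: 2 },
    { prompts: 2, debugs: 2 },
    { prompts: 1, debugs: 0 },
    { prompts: 2, debugs: 2 },
    { prompts: 3, debugs: 0 },
  ],
  Lovable: [
    { prompts: 2, debugs: 0 },
    { prompts: 3, debugs: 1 },
    { prompts: 1, debugs: 0 },
    { prompts: 2, debugs: 0 },
    { prompts: 3, debugs: 0 },
  ],
  V0: [
    { prompts: 1, debugs: 0 },
    { prompts: 0, debugs: 0 },
    { prompts: 1, debugs: 0 },
    { prompts: 2, debugs: 3 },
    { prompts: 1, debugs: 0 },
  ],
};

// Sum the prompts and debugs across all tasks for one tool.
function total(tasks: TaskCount[]): TaskCount {
  return tasks.reduce(
    (acc, t) => ({ prompts: acc.prompts + t.prompts, debugs: acc.debugs + t.debugs }),
    { prompts: 0, debugs: 0 },
  );
}

for (const tool of Object.keys(results)) {
  const t = total(results[tool]);
  console.log(`${tool}: prompts=${t.prompts}, debugs=${t.debugs}, combined=${t.prompts + t.debugs}`);
}
// Bolt: prompts=9, debugs=6, combined=15
// Lovable: prompts=11, debugs=1, combined=12
// V0: prompts=5, debugs=3, combined=8
```

The combined count treats a prompt and a debug as equal effort, which is a simplification; a debug cycle may well cost more time than a follow-up prompt.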

With that in mind I decided to skip testing of Cursor’s IDE because as a designer, I simply don’t need the more technically focussed environments. However I'm sure this tool provides incredible power for an engineer to explore further.

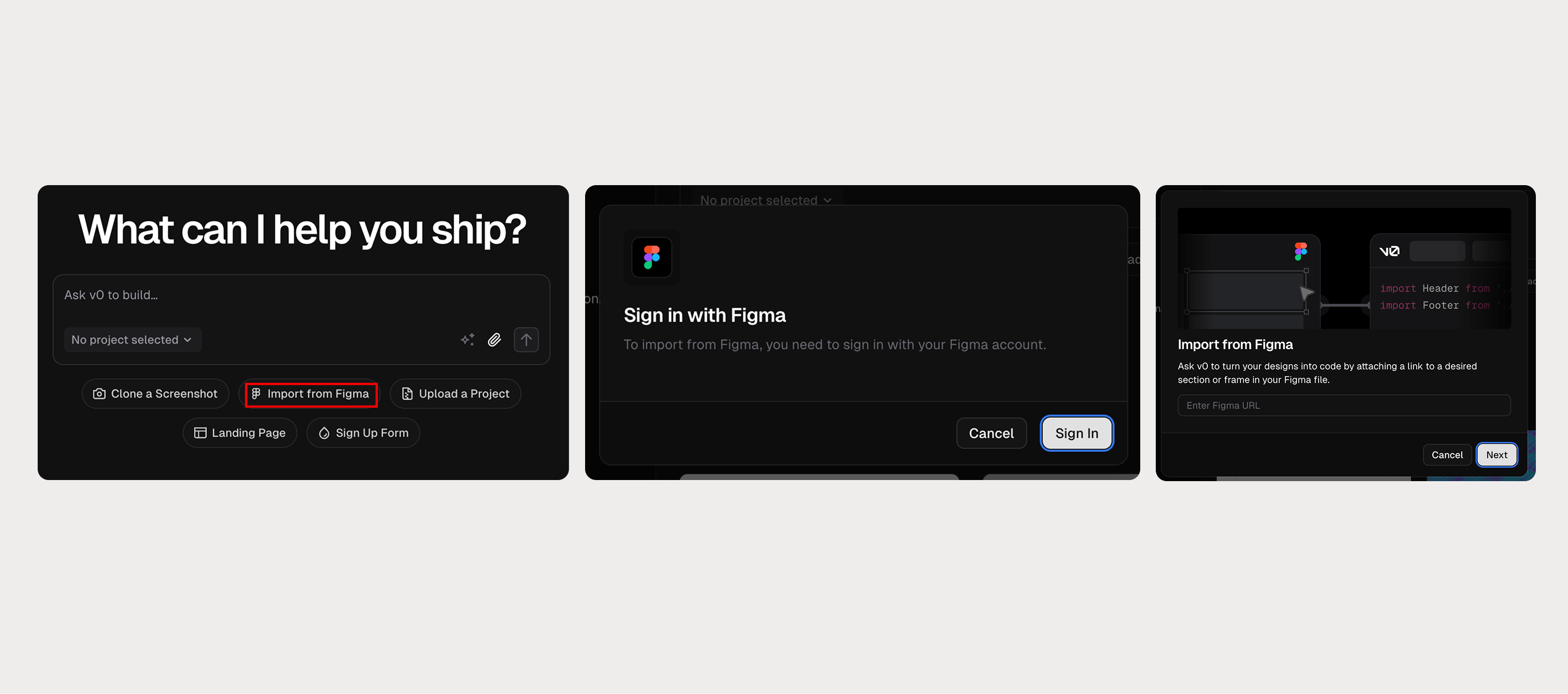

Beta: Import directly from Figma

So far we’ve looked at the following:

- Creating prototypes with text based prompting

- Creating prototypes using static images / screenshots as inspiration for the starting point

At the time of writing, three of the tools we’ve looked at have recently released integrations between Figma and their interface, meaning you can give the tools even richer information as a starting point. These integrations are currently in Beta and are likely to evolve rapidly over the coming months and years, but could this be the ideal workflow for a designer? Let’s have a look.

I started by using the in-Figma ‘First Draft’ AI feature to spin up a similar weather app design. To be clear, using AI to create this starting point is not a requirement; you could just as easily begin with designs you’ve crafted from scratch. Now I’m going to use this design to test each tool’s integration.

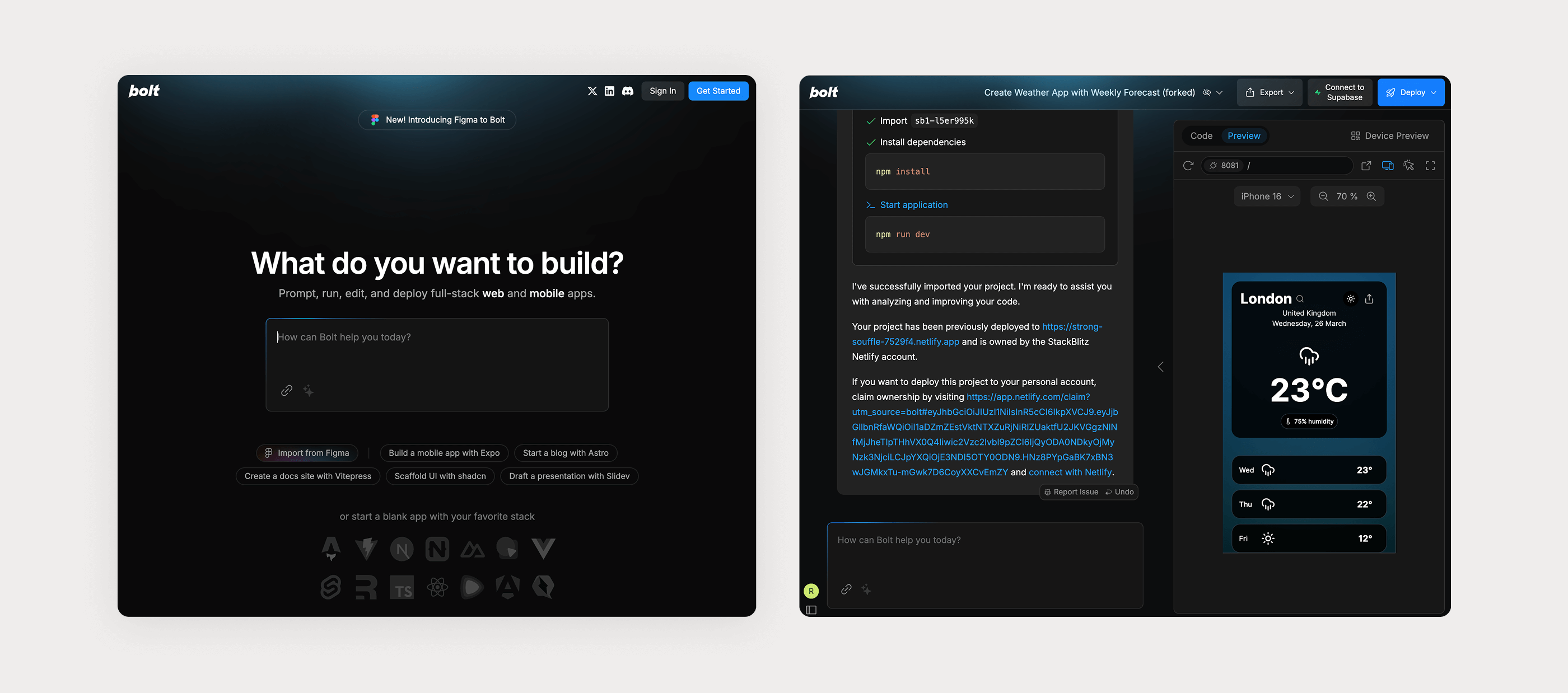

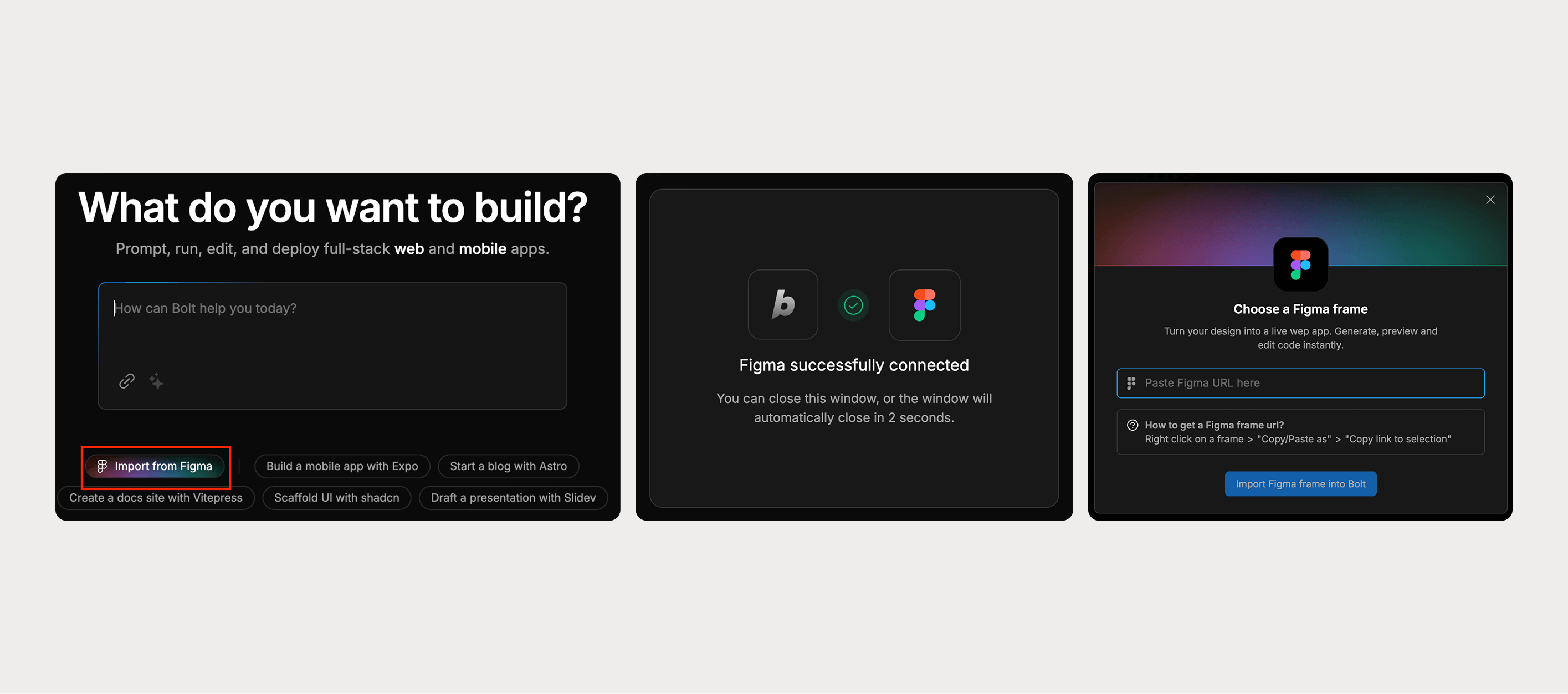

1. Figma > Bolt

- Click on the ‘Import from Figma’ link on the new prompt homepage of Bolt.new

- Follow the steps to allow access to your Figma account

- Paste your Figma URL from a frame selection directly into the modal in Bolt

Result = 8/10. This integration was easier than Lovable’s plugin route: it’s less of a faff to just paste the URL directly into the tool. And the results were a solid foundation to build on.

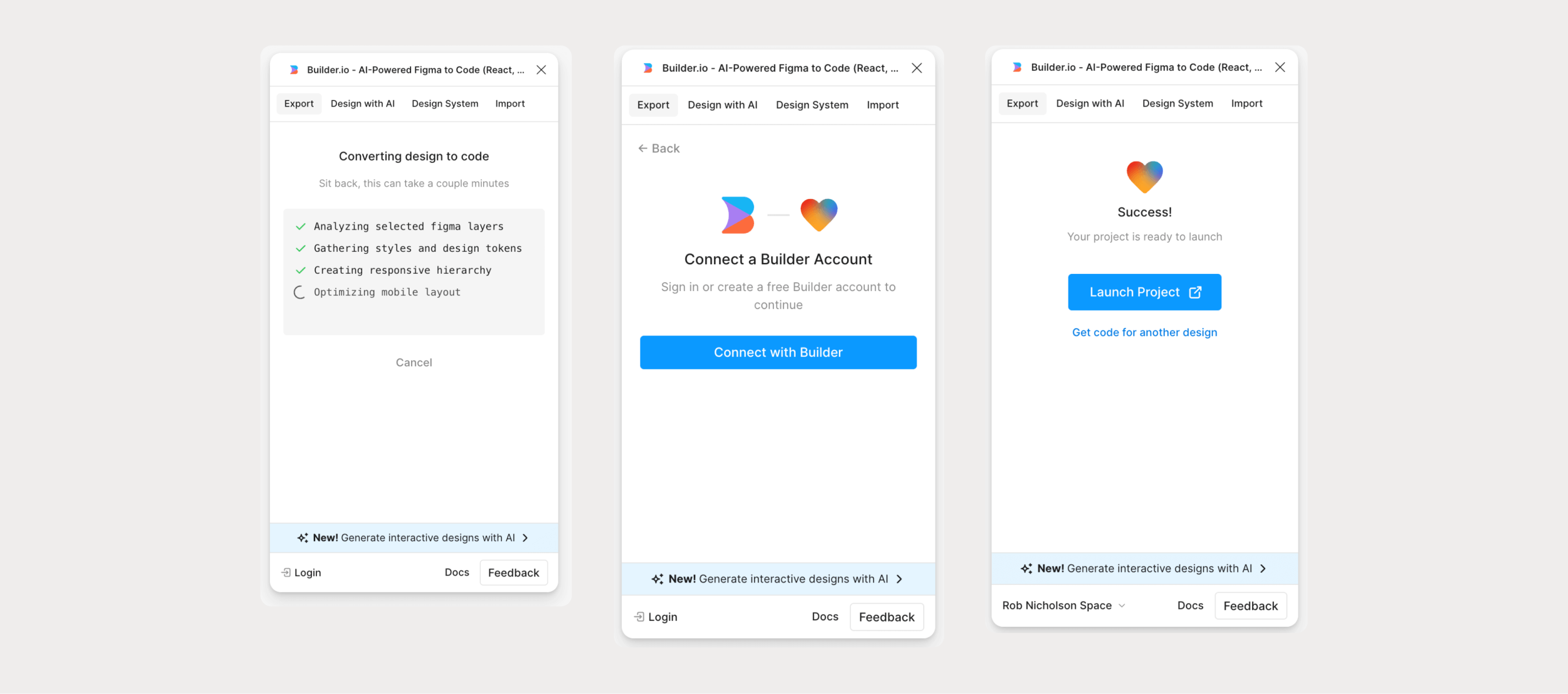

2. Figma > Lovable

- You need to spin up a free account with Builder.io in order to install the plugin into Figma. This plugin then becomes the bridge between Figma and your Lovable account.

- Once you have created and verified your Builder account, run the plugin from within Figma. Select the frame you want to build in Lovable and hit ‘Export Design’.

- Once your code is built it’ll ask you to complete the link by pointing it at your Lovable account, and hey presto! It'll launch a coded version of your page directly into a new Lovable project.

Result = 6/10. I got a reasonable result from the import, but I think this was slightly biased by the fact that the original design had been created by the AI within Figma to start with. Had I created the design myself, it would have been important to maintain a reasonable level of consistency in areas such as layer naming and auto layout discipline. All of these give the AI a better chance of generating something usable and scalable.
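As a rough illustration of what layer naming discipline could mean in practice, the sketch below flags layers that still carry a default-looking name such as “Frame 12”. It works on a plain list of names rather than calling Figma’s plugin API, and both the `findUnnamedLayers` helper and the list of default prefixes are assumptions for the example, not an exhaustive or official rule set.

```typescript
// Default-looking layer names such as "Frame 12" or "Rectangle 3".
// The list of prefixes is an assumption and is not exhaustive.
const DEFAULT_NAME = /^(Frame|Group|Rectangle|Ellipse|Vector|Line|Polygon|Star|Component)\s\d+$/;

// Return the layer names that were probably never renamed by the designer.
function findUnnamedLayers(layerNames: string[]): string[] {
  return layerNames.filter((name) => DEFAULT_NAME.test(name.trim()));
}

const flagged = findUnnamedLayers([
  "Header",
  "Frame 12",
  "Daily forecast card",
  "Rectangle 3",
]);
console.log(flagged); // [ 'Frame 12', 'Rectangle 3' ]
```

A check like this only covers naming; auto layout consistency would need to be reviewed in the design file itself.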

3. Figma > V0

- The process is basically the same as the Bolt experience. Click on the ‘Import from Figma’ link on the new prompt homepage of V0

- Follow the steps to allow access to your Figma account

- Paste your Figma URL from a frame selection directly into the modal in V0

Result = 7/10 The results weren't quite as accurate as Bolt, but the process was just as simple, smooth and quick.

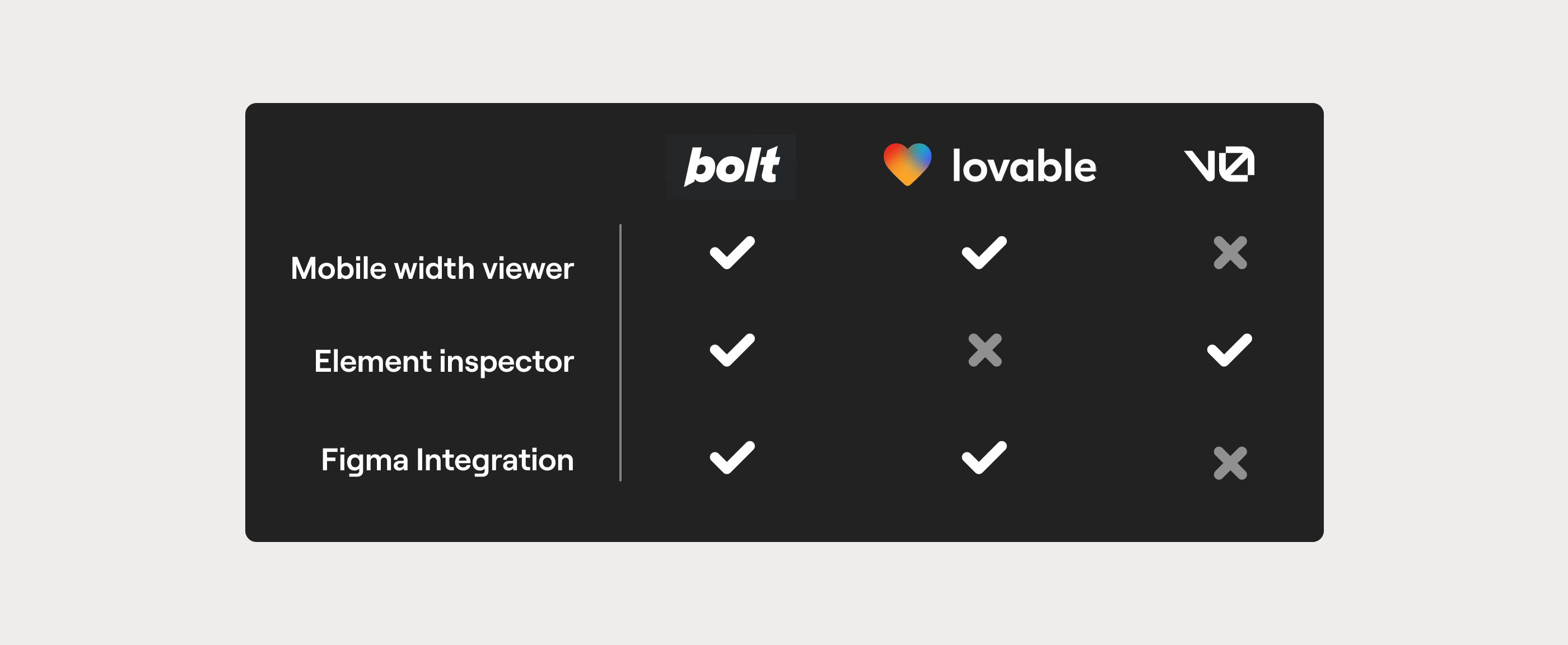

Closing thoughts

Which prompting tool would I currently use?

The tools that I have tested are all very similar given this sector is young enough that they haven't really had time to diverge into their own spaces yet. Below you can see some key features that I found useful and as things stand, Bolt is the only tool that offers all three. This is probably enough for me to prioritise Bolt for now, but I can see a workflow that involves trying all three of these tools with my first prompt, and picking the one that generates the best results. And I think the best results will vary depending on the prompt.

As a product designer, when would I use a prompting tool?

Everyone is going to have a different approach to when and how to integrate these tools into their existing workflows. For me I need to find the right balance between disrupting the current process and generating true value. Below I’ve listed five use cases where I’m confident that a prompting tool would add value for my situation as a product designer.

- Use case 1: Prototypes where user data input is a key part of the user experience (e.g. heavy form fields)

- Use case 2: Early phase wireframing to quickly define some core UX in a throwaway manner before the UI becomes key

- Use case 3: As a supporting handover artefact to discuss particular interactions with an engineering team

- Use case 4: As a feedback tool to define some in-house UX based on a competitor’s solution as a start point

- Use case 5: If I’m paired with an engineer and have the time and resource to create a complex Wizard of Oz prototype that requires API or database content

As a product designer, what continual professional development (CPD) could I focus on?

There’s also the age-old debate: should designers learn to code? In my experience, having even a basic understanding of HTML, CSS, and JavaScript has always been beneficial. These new tools add fresh momentum to the argument, making it easier than ever for designers, especially those who don’t work with code daily, to build foundational knowledge. Free resources like W3Schools make it incredibly accessible to get started. So why not make it a goal for your next CPD cycle if it’s not already in your repertoire?

What does the future hold?

Things are evolving fast. For example, while I was writing this article, Bolt released its Figma integration, something that only Lovable’s plugin offered when I started.

Adopting a new workflow is already a challenge, but staying open to its rapid evolution is even harder. Yet, for now, that’s objectively the best approach.

These AI prototyping tools are currently competing on multiple fronts:

- Market fit – Interestingly, rather than the tools adapting to an existing market, the market is shifting to accommodate them. That makes this an exciting time for these companies in this space.

- Marketing strategy – They’re in an aggressive land-grab phase. Since these tools are still quite similar, early awareness and adoption will be critical differentiators.

- Product innovation – The challenge lies in striking a balance between maintaining feature parity with competitors and pushing the boundaries with genuinely valuable new capabilities.

- Partnerships & acquisitions – I’m calling it now: within the next 18-24 months, at least one of these AI prototyping tools will be acquired by an established SaaS company.

The competition is heating up, and the next year or two will shape not only these tools but also how AI-driven design workflows become an industry standard.

So is it time for you to integrate AI into your prototyping workflow?